05. Getting captureMode As Soon As Your App Starts

Overview

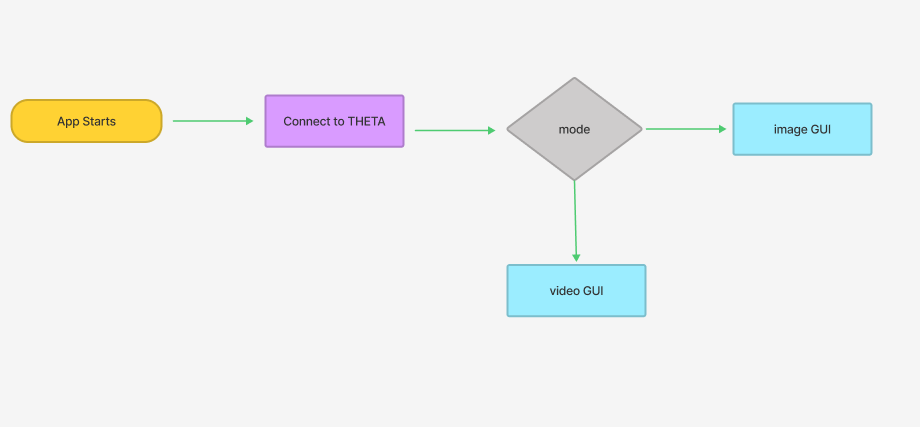

The objective of this tutorial is to switch the application's button between picture mode and video mode depending on the camera's initial state. The app is simple: it gets the captureMode as soon as the application builds.

This is the first of three tutorials focusing on getting camera mode. For this first one, the output appears in the debug console.

The app uses three Blocs in total: Image Screen, Video Screen, and Camera Use. Both the Image Screen and the Video Screen Blocs access the Camera Use Bloc. In this way, the Camera Use Bloc connects the two screens. The Camera Use Bloc reads the camera's mode so that each screen changes to match the mode of the physical camera. The app handles the GetModeEvent and the GetPictureEvent.

Your application should be able to switch between the image and video modes for the best user experience.

Main Resources

Getting captureMode as soon as the application builds

To implement this feature, the GetModeEvent is added inside the BlocBuilder in the main file. Every time the BlocBuilder builds, the application gets the mode of the camera.

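    child: MaterialApp(
      home: BlocBuilder<CameraUseBloc, CameraUseState>(
        builder: (context, state) {
          // Request the camera's captureMode on every build.
          context.read<CameraUseBloc>().add(GetModeEvent());
          // ... return the screen that matches state.captureMode ...
        },
      ),
    )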

Next, if the mode is image, the application displays the ImageScreen. If the mode is video, it displays the VideoScreen. Otherwise, it displays the RefreshScreen.

    if (state.captureMode == 'image') {
      return const ImageScreen();
    } else if (state.captureMode == 'video') {
      return const VideoScreen();
    } else {
      return const RefreshScreen();
    }
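The captureMode string checked above comes from the camera's response to camera.getOptions. The following is a minimal sketch of extracting it from the JSON body, assuming the response nests the value under `results.options.captureMode` as in the THETA Web API. The function name `parseCaptureMode` and the empty-string fallback are illustrative assumptions, not part of the tutorial's code.

```dart
import 'dart:convert';

/// Hypothetical helper: returns the camera's captureMode,
/// or '' if the body cannot be parsed or the field is missing.
String parseCaptureMode(String body) {
  try {
    final decoded = jsonDecode(body);
    if (decoded is Map<String, dynamic>) {
      final results = decoded['results'];
      if (results is Map<String, dynamic>) {
        final options = results['options'];
        if (options is Map<String, dynamic>) {
          final mode = options['captureMode'];
          if (mode is String) return mode;
        }
      }
    }
  } on FormatException {
    // Body was not valid JSON; fall through to the empty result.
  }
  return '';
}

void main() {
  // Assumed response shape for camera.getOptions.
  const body = '{"name":"camera.getOptions","state":"done",'
      '"results":{"options":{"captureMode":"video"}}}';
  print(parseCaptureMode(body)); // prints: video
  print(parseCaptureMode('not json').isEmpty); // prints: true
}
```

With this fallback, an unreadable response yields an empty captureMode, which matches neither 'image' nor 'video' and so lands on the RefreshScreen branch.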

Showing the thumbnail

An IconButton displays the thumbnail of the last image taken. The State holds a variable called showImage, which is set to true inside the GetPictureEvent. If showImage is true and there is a fileUrl, the application is intended to display the thumbnail image.

    return Expanded(
        child: context.watch<CameraUseBloc>().state.showImage &&
                context.watch<CameraUseBloc>().state.fileUrl.isNotEmpty
            ? InkWell(
                child: Image.network('${state.fileUrl}?type=thumb'),
              )
            : Text('response goes here '));

However, this did not work at first, because the GetModeEvent runs after the screen rebuilds. The GetModeEvent overrides the state and emits showImage as false. Thus, when the IconButton is expected to display the image, the application displays only the Text.

The current solution is to check whether showImage is true within the GetModeEvent and, if so, run the code to get the fileUrl, then emit the State with showImage set to true and the fileUrl. Although this solution shows the thumbnail image, the code is lengthy and not the best use of the Bloc structure.

    on<GetModeEvent>((event, emit) async {
      // Ask the camera for its current captureMode.
      var response = await thetaService.command({
        'name': 'camera.getOptions',
        'parameters': {
          'optionNames': ['captureMode']
        }
      });
      ...
      if (state.showImage) {
        ...
        var fileUrl = convertResponse['results']['entries'][0]['fileUrl'];
        // Re-emit the thumbnail fields so they are not overwritten.
        emit(CameraUseState(
            message: response.bodyString,
            captureMode: captureMode,
            fileUrl: fileUrl,
            showImage: true));
      }
      ...
    });
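One possible way to shorten the handler above is to copy the existing state and change only the fields that the mode check affects. The sketch below is an assumption, not the tutorial's implementation: the class is a simplified stand-in for CameraUseState with the same four fields, the default values are guesses, and `copyWith` is a hypothetical helper added for illustration.

```dart
/// Simplified stand-in for the tutorial's CameraUseState.
class CameraUseState {
  final String message;
  final String captureMode;
  final String fileUrl;
  final bool showImage;

  // Default values are assumptions made for this sketch.
  const CameraUseState({
    this.message = '',
    this.captureMode = '',
    this.fileUrl = '',
    this.showImage = false,
  });

  /// Hypothetical helper: returns a copy, replacing only the given fields.
  CameraUseState copyWith({
    String? message,
    String? captureMode,
    String? fileUrl,
    bool? showImage,
  }) {
    return CameraUseState(
      message: message ?? this.message,
      captureMode: captureMode ?? this.captureMode,
      fileUrl: fileUrl ?? this.fileUrl,
      showImage: showImage ?? this.showImage,
    );
  }
}

void main() {
  // State after a picture was taken (the URL is a made-up placeholder).
  const afterPicture = CameraUseState(
    captureMode: 'image',
    fileUrl: 'http://example.invalid/R0010001.JPG',
    showImage: true,
  );

  // A mode check that updates only message and captureMode.
  final afterModeCheck = afterPicture.copyWith(
    message: 'mode response',
    captureMode: 'image',
  );

  print(afterModeCheck.showImage); // prints: true
  print(afterModeCheck.fileUrl == afterPicture.fileUrl); // prints: true
}
```

Inside the handler, this would amount to something like `emit(state.copyWith(message: response.bodyString, captureMode: captureMode))`, so showImage and fileUrl survive the mode check without a second request for the file list.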

Using BLoC to manage state

The project is separated into three Blocs (camera_use, image_screen, and video_screen). The camera_use Bloc holds the GetModeEvent, the image_screen Bloc holds the TakePicEvent and GetPicEvent, and the video_screen Bloc holds the StartCaptureEvent and StopCaptureEvent.

When the video starts/stops, the IconButton changes shape to match the video's State.

Although the application has three separate Blocs, a single screen can call two of them. For example, on the image screen, the button for getting the camera mode calls the CameraUseBloc.

    IconButton(
      onPressed: () {
        context.read<CameraUseBloc>().add(GetModeEvent());
        // captureMode = state.captureMode;
      },
      icon: Icon(Icons.refresh),
    ),

In contrast, the button for taking the picture calls the ImageScreenBloc.

    IconButton(
      iconSize: 200,
      onPressed: () {
        context.read<ImageScreenBloc>().add(ImageTakePicEvent());
      },
      icon: Icon(
        Icons.circle_outlined,
      ),
    ),

Below is a diagram illustrating the flow of the Bloc structure. Both the Image Screen and the Video Screen Blocs access the Camera Use Bloc. In this way, the Camera Use Bloc connects the two screens together. The Camera Use Bloc accesses the camera's mode so that each of the screens will change depending on the mode of the physical camera.

Although the application calls multiple Blocs in one screen, it successfully performs the functionality as expected and gets the camera's mode.

Here is a test shot taken with the app on the RICOH THETA X at 5.5K resolution.